Personalizing the Customer Experience

At 84.51˚, we believe the future of data science will be driven by open-source technologies and toolsets. That’s why we’re going all in. However, transitioning 14+ years of development comes with its own challenges. We want to talk about a small example of the work we’re doing to make this migration happen and how we’re building a more stable base for the future by leveraging open-source libraries and learning from their design.

The Personalize the Customer Experience (PTCE) team is responsible for targeting Kroger’s Best Customer Communications (BCC) mailers. These mailers are sent to millions of households, and each mailer contains personalized coupons and content. The existing BCC codebase is made up of dozens of individual SAS production scripts using Oracle SQL, developed over 14 years. We need a comprehensive understanding of every task being run so that we can complete those same tasks using open-source tools like Python and Spark.

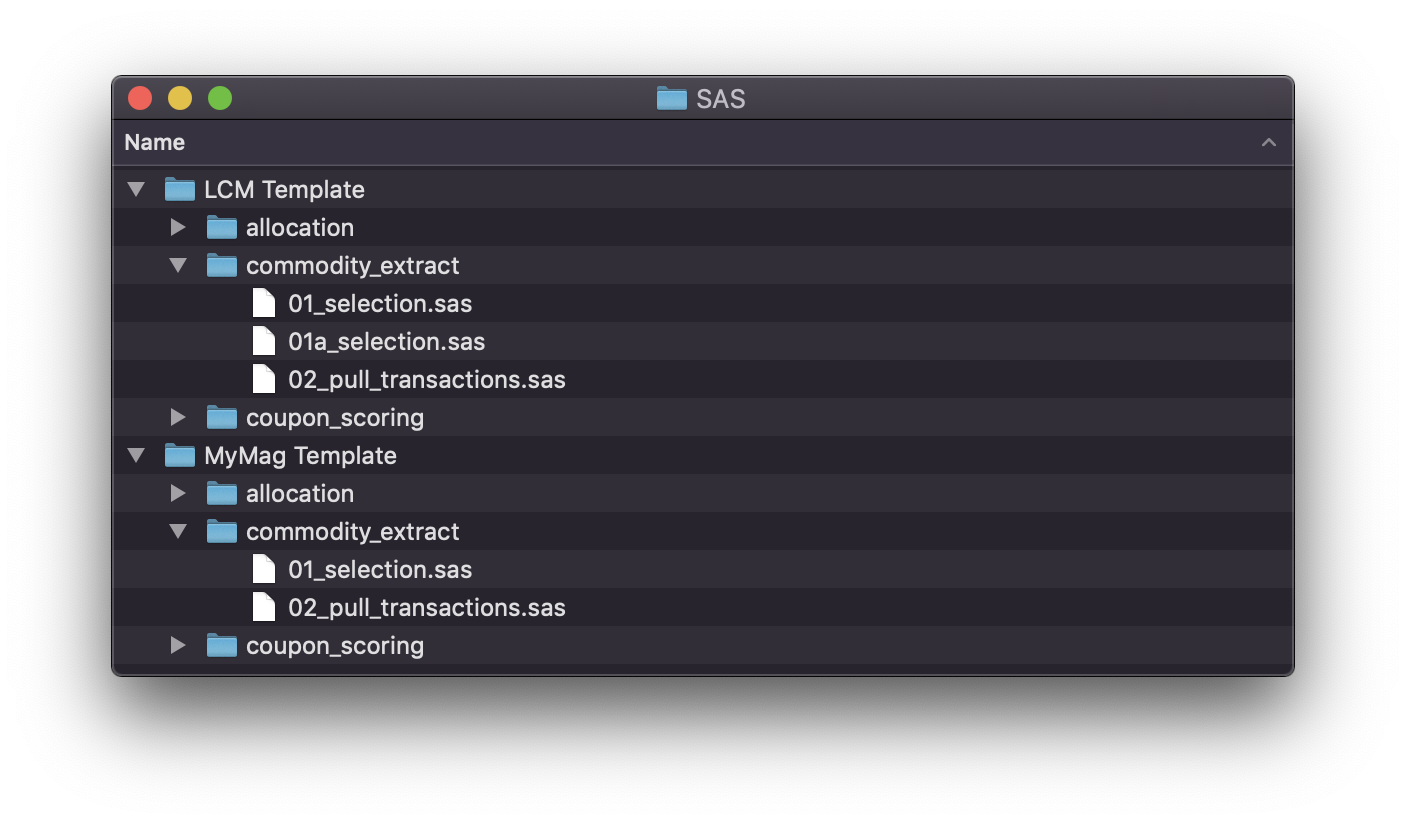

Campaign templates written with SAS and Oracle SQL

Like many legacy systems, our BCC codebase was originally written with a single type of campaign in mind and was slowly expanded beyond its original scope. Each subsequent campaign branched off the original and made its own modifications or added steps for its targeting. Eventually, template directories were created for each campaign type – each containing all the scripts necessary for a campaign to be run. This simplicity allowed production teams to confidently create a new campaign, update variables in the header, and run the process end-to-end.

Individual Campaigns are just a copy of the template

While the templating system provided large benefits in reproducibility, it didn’t come without downsides. First, there was an alarming amount of overlapping code in each directory structure. As new features were developed, or – more importantly – bugs were fixed, changes weren’t shared between teams without massive effort. In addition, each script had to be updated with campaign data and run manually, sharing data with the others via intermediate files. As some campaigns held over 200 scripts, this was a massive undertaking for each campaign.

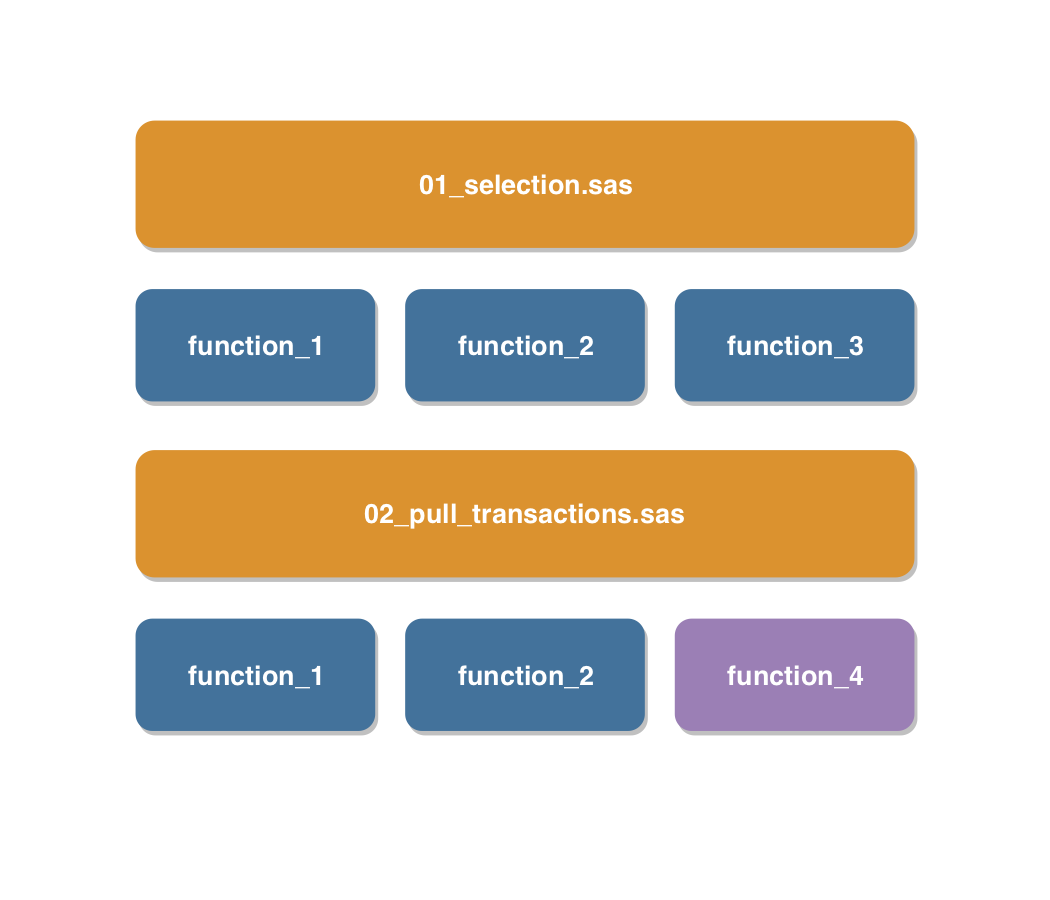

With the benefit of 14 years of learning under our belts and the opportunity to leverage more modern tooling, the PTCE team believed we could find another way. While large migrations can be a chaotic time for a team, we knew there would be no better time to improve on old processes. As we approached the targeting project, we wanted to avoid simply moving from 01_selection.sas to 01_selection.py during our transition to Python and Spark. Diving into each script, we started to break its sections into reusable Python functions. As we moved through the process, we found many opportunities to combine similar functions into composable ones that accomplished the same goal across multiple scripts.

Breaking our SAS scripts into Python Functions

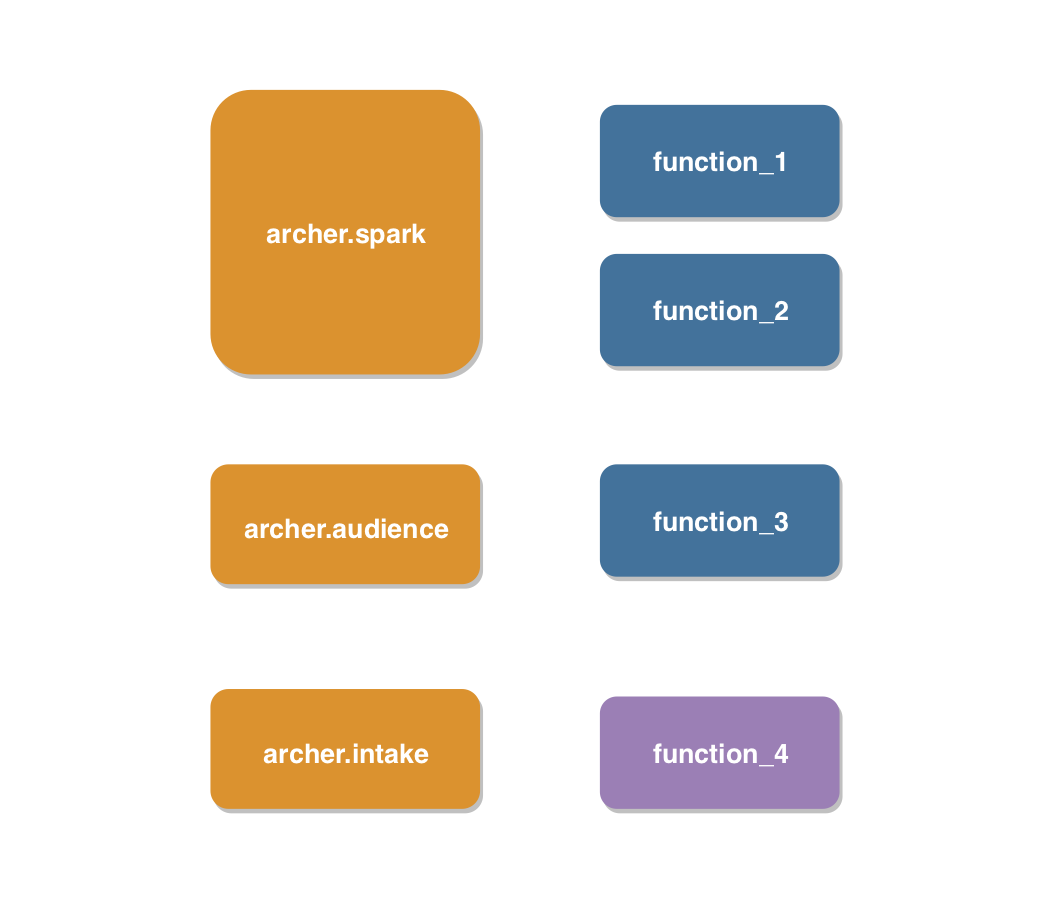

Finding ourselves with a collection of composable functions, we made the next logical step to create a Python library. Breaking each collection into a submodule, the Archer project was born. Archer was designed to be a collection of functions, with 14 years of BCC experience baked in, to be used in your targeting campaign. Designed to be run anywhere Spark lives, it works both on-prem or in the cloud.

Some of the functions living under the Archer library

Despite our long-time familiarity with open-source tools like Pandas, the scale of our targeting campaigns is beyond its comfort zone – so anywhere we can, we leverage PySpark. Even for smaller campaigns, using the same tools as the large ones provides consistency and confidence in our system. But not everything is a great fit for Spark.

One area where Pandas still shines is testing. Its utilities like assert_frame_equal are essential to creating a well-tested library. A typical test in the Archer library follows a simple path:

1. Import expected output from .csv file using Pandas
2. Run tested function using sample data
3. Export output to Pandas DataFrame
4. Sort, normalize types
5. Run assert_frame_equal
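The test path above can be sketched roughly as follows. This is a minimal illustration, not Archer’s actual test code: `select_households`, `normalize`, the column names, and the inline CSV are all hypothetical, and the function under test is written in Pandas so the example runs without a Spark cluster. In a PySpark test, the result would typically be converted with `toPandas()` before the comparison.

```python
import io

import pandas as pd
from pandas.testing import assert_frame_equal

# Hypothetical expected output; a real test would read this from a .csv file on disk.
EXPECTED_CSV = """household_id,spend
101,250.0
103,400.0
"""


def select_households(df: pd.DataFrame, min_spend: float) -> pd.DataFrame:
    """Hypothetical function under test: keep households at or above min_spend."""
    return df[df["spend"] >= min_spend]


def normalize(df: pd.DataFrame) -> pd.DataFrame:
    """Order columns, fix dtypes, and sort rows so the comparison ignores ordering."""
    out = df[sorted(df.columns)].astype({"household_id": "int64", "spend": "float64"})
    return out.sort_values("household_id").reset_index(drop=True)


def test_select_households() -> None:
    # 1. Import the expected output with Pandas.
    expected = pd.read_csv(io.StringIO(EXPECTED_CSV))
    # 2. Run the tested function on sample data.
    sample = pd.DataFrame({"household_id": [103, 102, 101], "spend": [400, 50, 250]})
    result = select_households(sample, min_spend=100)
    # 3. With Spark, this is where the result would be exported via toPandas().
    # 4. Sort and normalize types on both sides.
    # 5. Compare; assert_frame_equal raises AssertionError on any mismatch.
    assert_frame_equal(normalize(result), normalize(expected))
```

Normalizing both frames before the comparison keeps the test focused on content rather than on row order or integer-versus-float differences introduced by the export step.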

In some cases, our code leaves the realm of DataFrames entirely and we drop down to pure Python. In these cases, we leverage libraries like dataclasses to avoid unnecessary overhead when working with millions of Python objects while maintaining readable code.
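As an illustration of this style, the sketch below models a single household-offer score as a dataclass. The `OfferScore` class, its fields, and `best_offer_per_household` are hypothetical and not taken from Archer; the point is only that a dataclass gives named, typed fields and generated methods without hand-written boilerplate.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class OfferScore:
    """Hypothetical record: one household's score for one offer."""

    household_id: int
    offer_id: str
    score: float


def best_offer_per_household(scores: List[OfferScore]) -> Dict[int, OfferScore]:
    """Keep the highest-scoring offer seen for each household."""
    best: Dict[int, OfferScore] = {}
    for s in scores:
        current = best.get(s.household_id)
        if current is None or s.score > current.score:
            best[s.household_id] = s
    return best
```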

Finally, we combine the powers of DataRobot and PySpark to create and scale non-linear machine learning algorithms. Our process requires scoring millions of households on thousands of offers, so we need to apply our models to billions of rows of data. By passing DataRobot codegen jar objects to our Spark cluster, we can use highly sophisticated models to complete our massive-scale scoring in a fraction of the time it used to take.

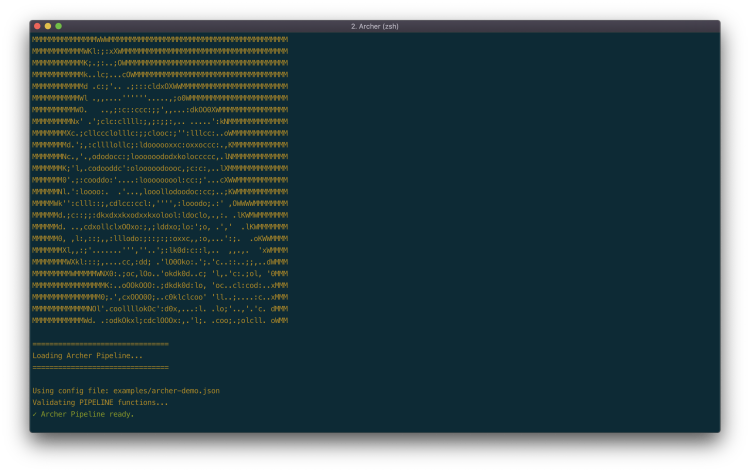

As we continued our transition process, we found that our composable functions were a better building block than previous scripts but still were not the end-to-end solution we were hoping for. While we use tools like Airflow extensively inside the company, we found our workflow inspiration in another open-source library: scikit-learn. Its pipelines for applying transforms to data closely matched what our functions were accomplishing with our Spark DataFrames. With this in mind, we expanded Archer to include an optional CLI function to run from a config file that defines the steps to run, any required inputs, and campaign-specific data. Defining these once per campaign instead of 200 times was already a huge productivity benefit, but with the pipeline handling running steps and managing the intermediate data internally, campaigns could be kicked off in one line.
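To make the config-driven idea concrete, here is a minimal sketch of a pipeline that runs named steps in order and keeps intermediate data in memory. It is not Archer’s implementation: the registry, the step names, the config layout, and the use of plain lists in place of Spark DataFrames are all assumptions made for illustration.

```python
from typing import Any, Callable, Dict

# A step receives the shared data store and the campaign options,
# and returns the new entries it wants to add to the store.
Step = Callable[[Dict[str, Any], Dict[str, Any]], Dict[str, Any]]

# Hypothetical registry mapping step names used in the config to functions.
STEPS: Dict[str, Step] = {}


def register(name: str) -> Callable[[Step], Step]:
    """Decorator that makes a function available to the pipeline by name."""
    def decorator(func: Step) -> Step:
        STEPS[name] = func
        return func
    return decorator


@register("load_households")
def load_households(data: Dict[str, Any], options: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for reading a Spark DataFrame; uses a plain list here.
    return {"households": list(range(options["household_count"]))}


@register("select_eligible")
def select_eligible(data: Dict[str, Any], options: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical eligibility rule: keep even-numbered household ids.
    return {"eligible": [h for h in data["households"] if h % 2 == 0]}


def run_pipeline(config: Dict[str, Any]) -> Dict[str, Any]:
    """Run each configured step in order, passing intermediate data along."""
    data: Dict[str, Any] = {}
    for name in config["steps"]:
        data.update(STEPS[name](data, config["options"]))
    return data


# Hypothetical campaign config; in practice this would be loaded from a file.
config = {
    "steps": ["load_households", "select_eligible"],
    "options": {"household_count": 6},
}
result = run_pipeline(config)
```

Because steps hand data to each other through the in-memory store, campaign-specific values live in one place (the config) rather than in the header of every script.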

Our Archer Pipeline in action

One issue that continued to plague us was the inevitable forgotten step or unset option, which you don’t discover until you are running at full scale and are 20 hours into the process. Hoping to find issues early on, we built a dry-run system that runs and inspects each step in your process to determine what data it expects and creates, as well as any options it expects to read. If it can’t find an expected input from a previous step – or an option you needed to define – it issues a friendly error message and perhaps saves you hours of frustration!
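A simplified sketch of this kind of check is shown below. Note the assumption: rather than inspecting steps as the dry-run system described above does, this version has each step declare its inputs, outputs, and options explicitly. `StepSpec`, `dry_run`, and the step and option names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class StepSpec:
    """Hypothetical declaration of what a step reads and writes."""

    name: str
    inputs: Set[str] = field(default_factory=set)
    outputs: Set[str] = field(default_factory=set)
    options: Set[str] = field(default_factory=set)


def dry_run(steps: List[StepSpec], defined_options: Set[str]) -> List[str]:
    """Walk the steps without running them and collect every problem found."""
    problems: List[str] = []
    available: Set[str] = set()  # data created by the steps seen so far
    for step in steps:
        for missing in sorted(step.inputs - available):
            problems.append(
                f"Step '{step.name}' expects input '{missing}', "
                "but no earlier step creates it."
            )
        for missing in sorted(step.options - defined_options):
            problems.append(
                f"Step '{step.name}' reads option '{missing}', which is not set."
            )
        available |= step.outputs
    return problems


# Hypothetical pipeline with a forgotten step and an unset option.
steps = [
    StepSpec("load", outputs={"households"}, options={"campaign_id"}),
    StepSpec("score", inputs={"households", "offers"}, outputs={"scores"}),
]
problems = dry_run(steps, defined_options=set())
```

Collecting all problems before reporting, instead of stopping at the first, lets a user fix a whole config in one pass.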

An important part of creating a reliable library is building confidence, both for yourselves and the users of the library. With that in mind, we built a process around Git, Docker, and CI tools to ensure we were pushing a well-tested product. Using Docker Compose, we build a small Spark cluster as well as an associated container in which we can develop and run our CI tests. Only once tests have passed on a branch can that branch be merged into master. This process has created up-front accountability and ensures we understand the impact of our changes. Of course, a testing process is only as good as the tests we write so we remain vigilant in our quest for 100% code coverage and ensure the tests are capturing the expected action of each function.

So, in summary, what has moving to the open-source world brought to our targeting process?

- Composable, shared functions (one place to fix bugs)
- Git branching and history (easy to test, add features, and understand changes)
- Confidence when making changes (continuous testing)
- Automated pipeline (reduced surface for mistakes)
- Runtime reduced from three weeks to three days

We’re excited to keep moving forward with the Archer project!
