Making the Best Out of Constraints

"Every task involves constraint, Solve the thing without complaint; There are magic links and chains, Forged to loose our rigid brains. Strictures, structures, though they bind, Strangely liberate the mind." - James E. Falen

You spent the last few months working out a method of ranking recipes a customer might like, based on what they buy at the grocery store. You’re about to mail each customer a booklet of recipes personalized just for them. This isn’t mass marketing of generic, popular recipes. Of the hundreds of thousands of booklets mailed, most will be unique. For digital communications it’s not that unusual, but physically printing that many unique copies in a cost-effective way is impressive.

In fact, it’s too good to be true. It turns out, to print the booklets in a way that isn’t cost prohibitive, only about 50 unique versions can be produced. Suddenly you’re working under a new, very tight constraint and the deadline is approaching. To get a sense for how restrictive this is, imagine you had a pool of only 100 recipes and each booklet contained 10 recipes. In that case, you have 100 choose 10 booklets (about 17 trillion) and you can give the very best one for each customer. Instead, due to this new constraint, you have 50 booklets to choose from

universe

17 trillion

dots

50. A lot smaller than 17 trillion

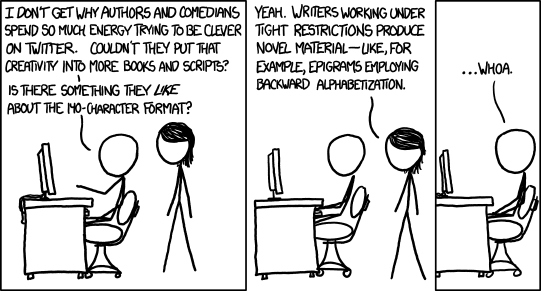

Projects with tight constraints can be frustrating. But as the introductory poem points out, they can also free our minds to look at things in a new way in order to get around the constraint. When we ran into the situation above, we tried a few different approaches and ultimately had to recast the problem in a new light.

Attempt #1: Most Popular Booklets

The first solution was to select the 50 most popular booklets. The approach looked like this:

Identify the best booklet for each customer as if there were no constraints (this is simple, since recipes are already ranked for each customer)

Identify how “popular” each booklet is (e.g. if a booklet is best for 100 customers)

Sort by popularity and use the top 50 booklets.

For each customer, identify which of the 50 booklets had the highest average recipe rank for that customer.

This had some intuitive appeal. It would select common combinations while ignoring outliers that only a few households preferred. While it probably was suboptimal, it seemed like a sensible heuristic given given our time constraints. A sensible, short term fix. Right?

chicken

Winner of the Popularity Contest

When we tested this approach, we ended up with 50 booklets of chicken recipes. Most of the booklets had the same first 7 recipes with the 8th-10th chicken recipes swapped out for other chicken recipes. Clearly this was not the result we wanted. We knew our customers had broader tastes, with some preferring beef, pork, fish, or vegetarian options.

Why was this happening? We expected to see more chicken than anything else since it’s the most popular, but why was there not a single popular recipe outside of chicken? When the number of possible booklets is in the trillions but the number of households is merely hundreds of thousands, the likelihood that two customers will have the same perfect book is pretty slim. This is clear when we look at the frequency distribution of booklets.

no_arrow

The distribution had an extremely long tail of booklets that were the best for only one household. Even the most popular booklets were the best for only 0.2% of customers. When we think about the kinds of customers who prefer books on the extreme left of the frequency distribution, it makes sense that they would be customers with very middle-of-the-road tastes and shopping habits. After all, if you had trillions of options to choose from, but you chose the exact same option as many other people, your preferences must not be terribly unique. It’s no surprise that these customers converged on that ever-exciting ingredient: chicken.

To look at it visually, an annotated chart might look like this:

arrow

As we move towards the tail of the distribution, we get more unique and interesting booklets of recipes. We start seeing booklets with less common recipes like seafood or vegetarian options. This inspired us to try option 2.

Attempt #2: Random Booklets

Choosing the most popular books left us cramped over on the left side of the distribution with all chicken recipes. What if instead we randomly sampled 50 booklets from that distribution? After all, the goal is to find 50 booklets that (somehow) best represent the 17 trillion possible ones (or at least the several hundred thousand that were the best fit of some of our customers). The purpose of random sampling is just that – to get a representative group. After random sampling, proceed as before: for each customer, identify which of the 50 random booklets had the highest average recipe rank for that customer.

Before we tested it out, we needed a way of objectively comparing the random sampling method to the popularity based method (beyond just visually inspecting to make sure we don’t have all chicken recipes). As mentioned before, each recipe was ranked from 1-100 for each customer. One of the simpler ways to evaluate the two methods is to compare the average recipe rank each customer received for method 1 vs method 2. We also looked at the variance of those scores across customers. High variance would indicate some customers are getting booklets that are a very good fit, while others’ booklets contain irrelevant recipes. Low variance indicates most customers have similar scores.

Sure enough, the randomized method had a better mean recipe rank and tighter variance than the popularity based method. Further, it had much better variety beyond chicken recipes. This illustrates the importance of selecting a good benchmark for any analysis. Even an obvious “dummy” benchmark such as always predicting the mean or guessing randomly can be useful. In this case what at first glance appeared to be a sensible heuristic (choosing based on popularity) actually ended up being worse than a totally random dummy benchmark.

Surely the dummy benchmark of picking booklets at random wasn’t the best we could do. How else could we formulate our problem? Our problem is to choose the best combination of booklets (where each booklet is itself a combination of recipes) in a way that maximizes the mean recipe rank, subject to the constraint that there are exactly 50 booklets. It’s further complicated by the fact that recipe rank is not fixed but varies for each customer according to tastes and preferences. For those familiar with it, this sounds like a very complicated version of the (knapsack problem)[https://en.wikipedia.org/wiki/Knapsack_problem]. On the one hand, this is good since there is a lot of literature on the problem. On the other hand, that literature claims it’s an extremely computationally complex problem to solve. With hundreds of thousands of customers involved, it’s unlikely a direct optimization solution would be feasible.

How else to approach the problem? When we manually inspected the booklets that were randomly chosen, we noticed some of them overlapped with each other heavily. Sometimes one booklet shared 9 out of 10 recipes with another booklet. With only a precious few booklet versions to print, it seems wasteful and redundant to choose two versions of what are essentially the same booklet. We wanted to choose 50 booklets in such a way that each customer’s ideal booklet was “close” to one of the 50, and each of the 50 were sufficiently “far” from each other to avoid redundancy. This led us to try our third method.

Attempt 3: Clustering Booklets

Choosing booklets that are “far” from each other but “near” customers’ ideal books is a lot like maximizing inter-cluster distance but minimizing intra-cluster distance. But to cluster the booklets, we need a way of representing each booklet in euclidean space or, at the very least, calculating pairwise distances between booklets. We hinted at the notion of similarity between booklets earlier: recipe overlap. We can represent each booklet as a 100 dimensional binary vector, where the ith element indicates whether recipe #i is present or absent from that book, like so:

booklet = [0,0,1,0,1,...,0,1]

Since each booklet has ten recipes, each vector sums to 10. Once each booklet is encoded this way, we create a table where each record is a customer’s best book. The resulting table has 100 columns and N rows, where N is the number of customers:

Chart that shows clustering booklets

We can then run a clustering algorithm on this table using a distance metric such as hamming. This should effectively group customers together whose ideal booklets have a lot of overlapping recipes. Each cluster center represents one of our ideal 50 booklets. Since clustering algorithms are designed to keep cluster centers far from each other, we can expect minimal redundancy among the final 50 booklets.

This is a somewhat unorthodox use of a clustering algorithm, but it makes things a bit easier than traditional clustering for a few reasons.

We know the number of clusters to be 50, since we can only print 50 versions. So the task of choosing an appropriate number of clusters, which is typically artful and inexact, is solved for us.

We don’t have to worry about clusters capturing “real” groups. Our problem is to optimize average recipe rank, not segment customers according to some “true” underlying distribution. So we can ignore over- and under-fitting.

Similar to #2, we don’t have to worry about the clusters generalizing well to other, unseen recipe booklets not in our training set. This is because we are not building a general model that will be reused on future sets of recipes with unknown ranks. The ranks are known. The recipes simply need to be allocated in a way that maximizes the ranks.

When we tested this approach, we found a significant improvement in average recipe rank and modestly tighter variance in ranks across households – a success in both metrics!

It’s never ideal to suddenly have a constraint you weren’t expecting. However, in this case it forced us to reframe our problem in a few different ways. Ultimately it helped us see clustering algorithms in a new light and use them to solve a problem they were never meant to solve. Strictures, structures, though they bind, strangely liberate the mind.

Visit our knowledge hub

See what you can learn from our latest posts.