Automation at 84.51˚

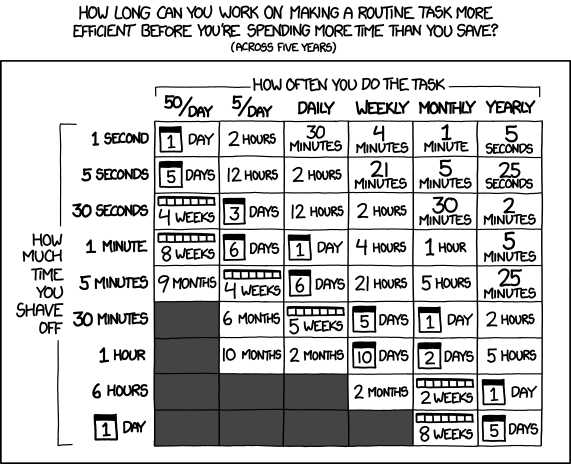

Automation is something of a buzzword in technical fields, including data science. Every mid- to large-size company has hundreds of tedious workflows that demand human effort on a regular basis; abstracting away these tasks via tool development can save a team many man-hours each week. But when considering automation, the time savings offered by a solution must be measured against the effort required to develop that solution. In fact, this concept comes up so often that xkcd offers a helpful guide:

When identifying the work that most merits automation, data scientists should look for tasks that take a long time (downward on the table) or tasks that are done very frequently (leftward on the table). An easy target is non-trivial but essentially identical code used across a team or department - particularly if that code solves a company-specific problem. For example:

Connecting to a central data mart with credentials

Interacting across environments

Each of these items takes a meaningful amount of time on every project (or even every time an analyst starts working for the day). At 84.51˚, we’ve found several opportunities to abstract these tasks away, and we’ve organized that functionality into two major internal packages - one for R and one for Python.
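The trade-off between time saved and development effort described above can be put into rough numbers. The sketch below is only illustrative: the function names, the five-year default horizon, and the example figures are assumptions, not measurements from our teams.

```python
def hours_saved(minutes_per_run: float, runs_per_week: float, weeks: int = 52 * 5) -> float:
    """Hours recovered over the horizon if the task is fully automated.

    The default horizon of five years (260 weeks) is an assumption for illustration.
    """
    return minutes_per_run * runs_per_week * weeks / 60


def worth_automating(minutes_per_run: float, runs_per_week: float,
                     dev_hours: float, weeks: int = 52 * 5) -> bool:
    """True when the projected savings exceed the estimated development effort."""
    return hours_saved(minutes_per_run, runs_per_week, weeks) > dev_hours


# A hypothetical 6-minute task done once per workday by one analyst.
print(hours_saved(6, 5))             # 130.0
print(worth_automating(6, 5, 40))    # True: 130 hours saved vs. 40 hours to build
print(worth_automating(6, 5, 200))   # False: the tool would cost more than it saves
```

Multiplying the savings by the number of analysts who repeat the same task is what usually tips the balance for shared code.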

effo (Eighty Four Fifty One)

effo, our internal R package, started as a way to simplify database connections. Simple functions allowed users to import or export tables with just one line of code. Once we established the base, we added more functionality, such as importing the results of a query and some rudimentary database helper functions.

The next step in effo was developing functions and templates to assist with our company branding. This started with a function that loaded the RGB values of the company colors into a user’s environment, then evolved to include a ggplot theme and an RMarkdown template. A template for Shiny apps is under development and soon to follow.

py_effo

effo’s Python cousin, py_effo, also focuses on database connections first and foremost. Because Python is relatively new to 84.51˚ and our analysts typically have backgrounds in statistics (as opposed to computer science), few people are comfortable interfacing directly with cx_Oracle. cx_Oracle is Oracle’s Python API, but it can be difficult to use without thorough knowledge of databases (for example, manual cursor management is unavoidable). To simplify the use of Python in our environments, we built py_effo to abstract data movement into a handful of concisely named methods:

import_table

export_table

query
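To give a feel for what hiding cursor management behind these three names can look like, here is a minimal sketch. It is not py_effo's actual code: it uses the standard-library sqlite3 module as a stand-in for cx_Oracle (both follow the Python DB-API), and the function signatures are assumptions made for illustration.

```python
import sqlite3
from contextlib import closing


def query(conn, sql, params=()):
    """Run a SELECT and return (column_names, rows); the cursor is closed for the caller."""
    with closing(conn.cursor()) as cur:
        cur.execute(sql, params)
        columns = [d[0] for d in cur.description]
        return columns, cur.fetchall()


def export_table(conn, table, columns, rows):
    """Create `table` and load `rows` into it.

    Sketch only: identifiers are interpolated without validation, and the "?"
    placeholder style is sqlite3's; other drivers use different bind syntax.
    """
    col_list = ", ".join(columns)
    placeholders = ", ".join("?" for _ in columns)
    with closing(conn.cursor()) as cur:
        cur.execute(f"CREATE TABLE {table} ({col_list})")
        cur.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    conn.commit()


def import_table(conn, table):
    """Pull an entire table back as (column_names, rows)."""
    return query(conn, f"SELECT * FROM {table}")


# The analyst never touches a cursor.
conn = sqlite3.connect(":memory:")
export_table(conn, "sales", ["store", "units"], [("A", 3), ("B", 5)])
columns, rows = import_table(conn, "sales")
```

The point of the design is that opening, closing, and iterating cursors happens in one reviewed place rather than in every analyst's script.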

Though we originally developed it for Oracle database interaction, we saw an opportunity to extend py_effo beyond just Oracle; we built up the same functionality for Hive, to help analysts access HDFS. And to enforce these methods on all future database connections, we implemented an abstract DatabaseConnection class, from which the OracleConnection and HiveConnection classes inherit. An ImpalaConnection class is currently being explored, which would - of course - also derive from DatabaseConnection.
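The way an abstract base class enforces a shared interface can be sketched as follows. Only the DatabaseConnection name and the three method names come from the description above; the signatures, the SqliteConnection stand-in (used here so the example runs without an Oracle or Hive cluster), and the IncompleteConnection class are hypothetical.

```python
import sqlite3
from abc import ABC, abstractmethod


class DatabaseConnection(ABC):
    """Every concrete connection must implement these three methods."""

    @abstractmethod
    def import_table(self, table): ...

    @abstractmethod
    def export_table(self, table, columns, rows): ...

    @abstractmethod
    def query(self, sql, params=()): ...


class SqliteConnection(DatabaseConnection):
    """Hypothetical stand-in for a backend such as OracleConnection or HiveConnection."""

    def __init__(self, path=":memory:"):
        self._conn = sqlite3.connect(path)

    def query(self, sql, params=()):
        return self._conn.execute(sql, params).fetchall()

    def import_table(self, table):
        return self.query(f"SELECT * FROM {table}")

    def export_table(self, table, columns, rows):
        marks = ", ".join("?" for _ in columns)
        self._conn.execute(f"CREATE TABLE {table} ({', '.join(columns)})")
        self._conn.executemany(f"INSERT INTO {table} VALUES ({marks})", rows)
        self._conn.commit()


class IncompleteConnection(DatabaseConnection):
    """Forgets import_table and export_table, so it cannot be instantiated."""

    def query(self, sql, params=()):
        return []


db = SqliteConnection()
db.export_table("sales", ["store", "units"], [("A", 3)])
print(db.import_table("sales"))

try:
    IncompleteConnection()
except TypeError as err:
    print("rejected:", err)
```

Because the check happens at instantiation, a new backend that omits one of the methods fails immediately rather than partway through an analyst's workflow.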

Development

The idea to develop an internal R package was sparked by a conversation between two data scientists discussing functions they had written. They quickly recognized that there was some overlap and there would be tremendous benefits from formally organizing the functions into a package. Many of the functions had broad applicability across the analyst community, so a single good solution could help many people. That afternoon they booked a meeting room, and effo was born. Rather than developing personal functions in silos, creating a package made it easier to collaborate and develop a better product together. After augmenting the R package with documentation and tests, they shared it with the analyst community so everyone could benefit.

Similarly, py_effo was originally a side-of-desk project for two data scientists on our Digital team. Analysts at 84.51˚ have historically used R more frequently than Python, but in 2016 our Digital team began migrating its codebase to Python, and thus arose the need for a Python equivalent to effo. Over several weeks, two members of the team identified pain points (primarily database connections) and built up a codebase to address them.

Both packages are currently hosted on GitHub Enterprise, which allows all of our scientists to contribute improvements through pull requests. Just as importantly, GitHub makes it simple for users to log issues even if they’re not interested in contributing their own code.

Socialization

Socialization of internal tools presents a totally different (and equally daunting) challenge from development. Every organization struggles with teams becoming siloed, and 84.51˚ is no different. However, good information-sharing practices can mitigate these problems.

Fortunately, effo and py_effo solve real needs - so others are usually happy to adopt them once they see the benefits. The bigger challenge is in spreading awareness of the packages and understanding of what they do. At 84.51˚, we’ve had success by focusing on three strategies:

Documenting them well

Incorporating them in internal trainings

Using them in internal example code

Documentation is the single most important element of socialization. Analysts can’t use a tool that they don’t understand. In both effo and py_effo, the package developers made documentation a first-class goal, documenting each new function or class as they went. This has resulted in a sizable but easily consumable record of function descriptions, parameters, and return values.

Internal trainings for R and Python are often targeted at analysts who have yet to form habits with these languages. This makes these trainings an ideal opportunity to help others add effo and py_effo to their workflows permanently. By referring analysts to the documentation and the code examples (which incorporate the internal packages), we show them where to look in the future for usage patterns.

Maintenance

Like any major project, an internal package demands ongoing maintenance. At 84.51˚, one data scientist owns each package and is responsible for moderating the GitHub repo by managing pull requests, addressing known issues, and handling feature requests. But a major internal tool requires more than just one person. Both effo and py_effo rely heavily on community contributions and bug logging; the package owners are just those responsible for taking (or assigning) action based on community feedback. Work on the packages remains side-of-desk, but as more analysts come to rely on these tools, that may change.
