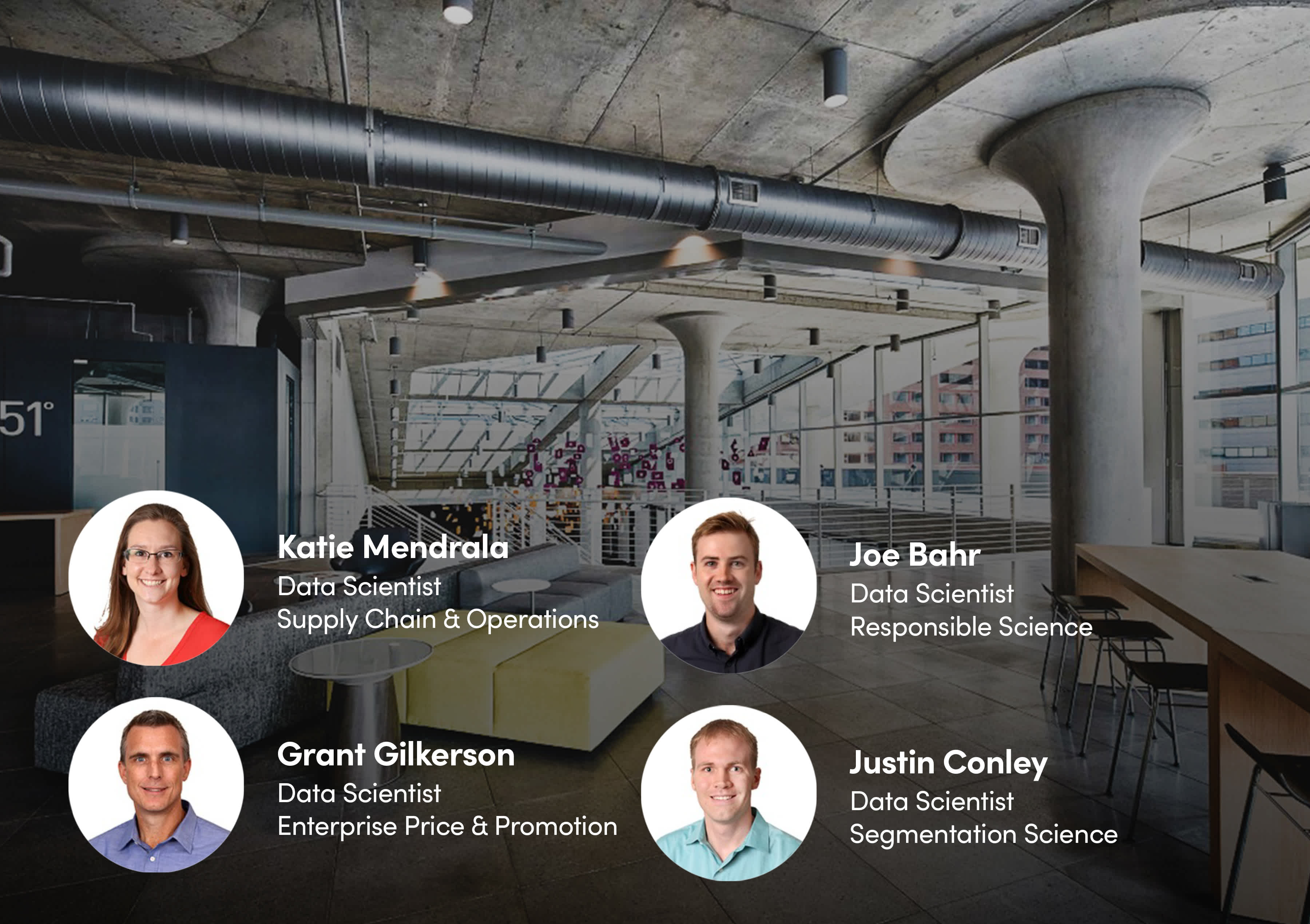

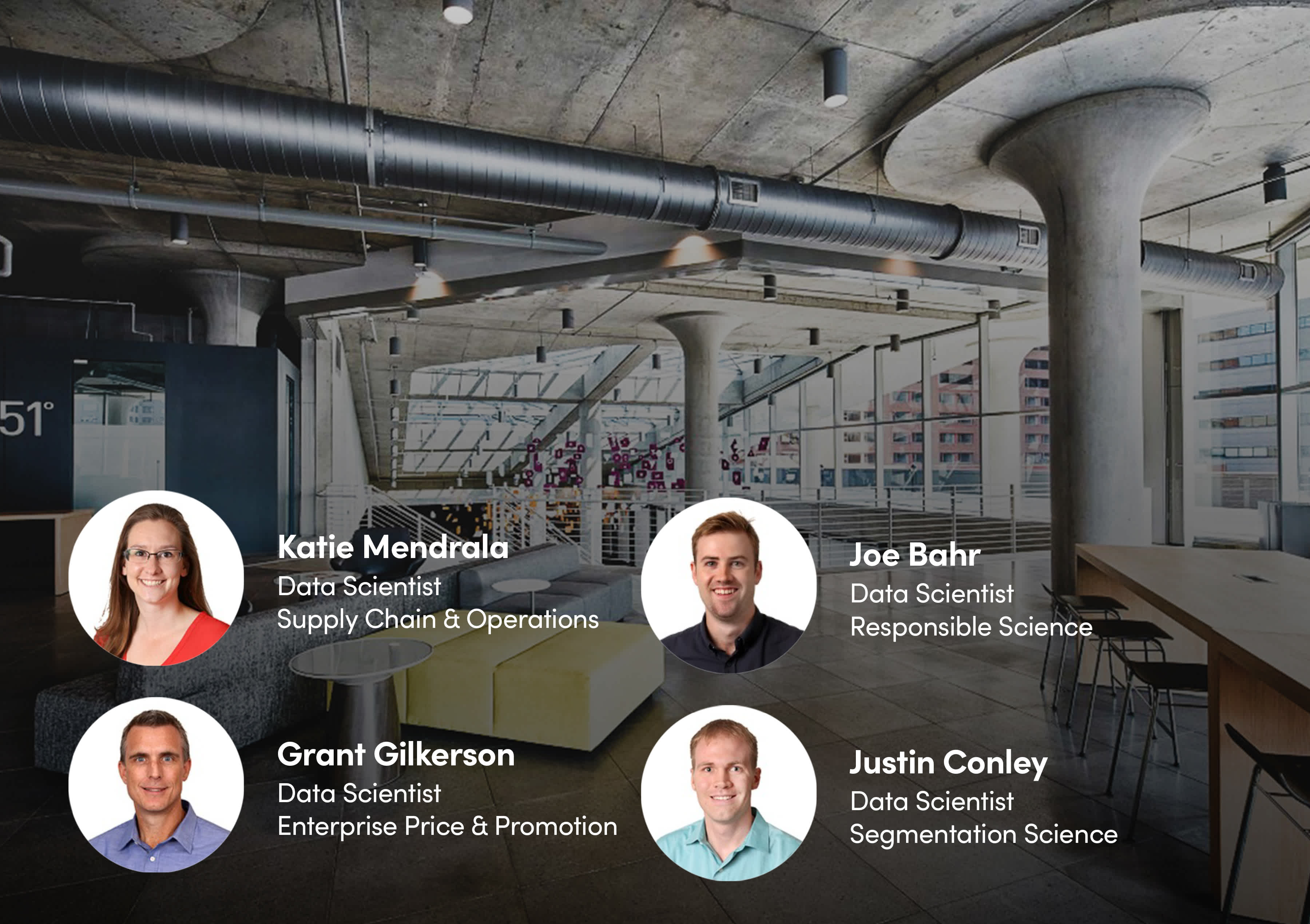

Responsible AI and algorithm fairness

Welcome to 84.51° Data University, a series of quarterly insights for prospective and current data-science professionals.

Can you tell me about this group and what it does?

To do this, we work directly with Kroger and 84.51° teams to identify and remediate potential areas of algorithmic bias, such as AI models that perform differently across protected groups of individuals. Think of it as internal consulting backed by principles.

Why do you think this group’s work is needed?

The use of algorithms within our society is growing rapidly. Today, algorithms influence and direct many decisions and product functions that affect the lives of individuals. Bias can be unintentionally introduced into these models in various ways, one of the more prominent being the data on which the models are trained and developed. This data often carries embedded biases that are currently, and have historically been, present within our society. As algorithms find more and more uses that influence our lives and our relationships with others and with institutions, data science and engineering sit at a new forefront in the fight against discrimination.

As practicing data scientists and engineers, we have an important role to play in combatting this discrimination by preventing, discovering and remediating harms that may come to individuals through biased algorithms.

Do you have examples of how this work has helped us mitigate bias, advocate for gender diversity or champion underrepresented populations?

Yes! 84.51° teams are asked to evaluate their applied algorithms/models to ensure the models reduce or eliminate:

The perpetuation of negative gender-, race- or age-based stereotypes, i.e. representational harms

The unfair allocation of resources that benefit our customers, e.g. personalized coupons or awareness of a sales event, i.e. allocation harms
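
As a rough illustration of what a check for allocation harms could look like, the sketch below compares how often a resource (here, a hypothetical coupon offer) reaches customers in different groups. The group labels, the records and the function names `selection_rates` and `disparity_ratio` are illustrative assumptions, not an 84.51° method or real data.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of customers in each group who received the offer.

    `records` is an iterable of (group, received_offer) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, received in records:
        totals[group] += 1
        if received:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody received the offer, so no group is favored
    return min(rates.values()) / highest


# Made-up example data: (hypothetical group label, received the coupon?)
records = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(records)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 would suggest that one group receives the offer far less often than another and that the allocation deserves a closer look. What counts as "well below" is a judgment call for the team, not something this sketch decides.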

How do you think your experience as a data scientist drives change and impact in this space?

Data scientists have the expertise and curiosity to evaluate these systems, beginning with the data used to develop them and continuing through a thorough evaluation of the algorithm’s output to identify potential biases. If biases are found, data scientists have the skills to correct them, i.e. reduce or eliminate them, before the models are placed into a live production setting.
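
One simple form such an output evaluation might take is comparing a model's accuracy across groups before release. The sketch below is a minimal example under stated assumptions: the group labels, the true and predicted values, and the helper names `accuracy_by_group` and `largest_gap` are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Return the fraction of correct predictions within each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, prediction in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(metric_by_group):
    """Difference between the best and worst group value of a metric."""
    values = list(metric_by_group.values())
    return max(values) - min(values)


# Made-up example data for two hypothetical groups
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

accuracy = accuracy_by_group(groups, y_true, y_pred)
gap = largest_gap(accuracy)
print(accuracy, gap)
```

Accuracy is only one possible metric. The same per-group comparison could be applied to error rates or other measures, and a large gap would be a prompt for further investigation rather than proof of bias on its own.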

We also have an opportunity and a responsibility to consult with the business as technical translators, educating not only on the impacts that bias can have but also on how it can arise unintentionally and behind the scenes.

Is there anything else you all would like to share?

While data scientists have a key role in the pursuit of eliminating algorithmic bias, every individual on a product team building software or tools has a role to play. For example, ensuring that your product or software does not harm individuals through bias should be built into the product’s success criteria and/or set as a key result toward the product’s objectives.
